This is the first in an ongoing series about ways Drupal can be badly misused. These are generally times when someone tried to solve an otherwise interesting problem in just about the worst possible way. Each post will start with a description of the problem, then how not to solve it, and then ideas about how to solve it well.

I present these at SC Drupal Users Group meetings from time to time as an entertaining way to discuss ways we can all improve our skills.

This first one was presented a while ago now (February 2015).

The Problem

The developer needed to support an existing JavaScript app with access to content in the form of Drupal nodes encoded in JSON. This is a key part of any headless Drupal project (this entire site was not headless, just one application), and in Drupal 7 and earlier there was no way to do this in core.

The Sinful Solution

Create a custom response handler within template.php that executes every time template.php is loaded.

The Code

During a routine code review of this site I found the following code in template.php:

...

```php
function theme_name_preprocess_region(&$vars) {
  if ($vars['region'] == 'header') {
    $vars['classes_array'][] = 'clearfix';
  }
}

if (isset($_POST['mode'])) {
  if ($_POST['mode'] == 'get_fields_node') {
    $node = node_load($_POST['id']);
    $container = array();
    $sliderCounter = count($node->field_event_slider['und']);
    for ($i = 0; $i < $sliderCounter; $i++) {
      $field_collection_id = $node->field_event_slider['und'][$i]['value'];
      $field_collection = entity_load('field_collection_item', array($node->field_event_slider['und'][$i]['value']));
      $currentCollectionItem = $field_collection[$field_collection_id];
      if (isset($currentCollectionItem->field_slider_image['und'][0]['uri'])) {
        $container['slider'][$i]['src'] = file_create_url($currentCollectionItem->field_slider_image['und'][0]['uri']);
      }
      if (isset($currentCollectionItem->field_slider_caption['und'][0]['value'])) {
        $container['slider'][$i]['caption'] = $currentCollectionItem->field_slider_caption['und'][0]['value'];
      }
    }
    $focusTid = $node->field_event_type['und'][0]['tid'];
    $eventTerm = taxonomy_term_load($focusTid);
    $dateTid = $node->field_event_date['und'][0]['tid'];
    $dateTid = taxonomy_term_load($dateTid);
    $container['nid'] = $node->nid;
    $container['focusTid'] = $focusTid;
    $container['title'] = $node->title;
    $container['focus'] = $eventTerm->name;
    $container['date'] = $dateTid->name;
    $container['body'] = $node->body['und'][0]['value'];
    print json_encode($container);
    die();
  }
  if ($_POST['mode'] == 'get_fields_focus') {
    $focusTerm = taxonomy_term_load(substr($_POST['id'], 4));
    $container['nid'] = $_POST['id'];
    $container['title'] = $focusTerm->name;
    $container['body'] = $focusTerm->description;
    print json_encode($container);
    die();
  }
}
```

...

Notice that between the two functions is a stray block of code wrapped in if (isset($_POST['mode'])) {...}. So on every request that loads the theme, template.php is loaded and, in addition to the normal parsing, triggers a check to see whether the page request was a POST that included a mode. If it was, we then proceed to load up a node and a couple of taxonomy terms, and then encode them as JSON for the response. The site sends the response and then unceremoniously die()s.

Why is this so bad?

First, there is no validation or access checking on the ID parameter: node_load($_POST['id']). Anyone on the internet can load any node if they work out the ID, because node_load() performs no access check of its own. Since nodes are numbered sequentially, an attacker could just start at 1 and work their way up until they noticed that the site was sending the same useless response over and over. The code also doesn’t send a 404 if the NID provided is invalid.
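As a minimal sketch of the missing parameter check, the helper below accepts only positive integers and rejects everything else. The function name sketch_parse_node_id() is hypothetical and is not part of Drupal or of the reviewed site; it uses only PHP's built-in filter_var().

```php
<?php
/**
 * Returns the ID as a positive integer, or NULL if the raw value is not one.
 *
 * Hypothetical helper for illustration only.
 */
function sketch_parse_node_id($raw) {
  // FILTER_VALIDATE_INT rejects arrays, empty values, and strings such as
  // "12abc"; min_range rejects zero and negative numbers.
  $id = filter_var($raw, FILTER_VALIDATE_INT, array(
    'options' => array('min_range' => 1),
  ));
  return $id === FALSE ? NULL : $id;
}
```

In Drupal 7 a validated ID would still need to go through node_load() and then node_access('view', $node), with drupal_not_found() or drupal_access_denied() handling the failures.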

Second, no reasonable developer would expect you to hide a custom callback handler in template.php. It should be totally safe to load template.php without generating output under any condition (that should be true of all non-tpl.php files).

Third, this code could run during any page request, not just the ones the application designer thought about. The request could have had Drupal do something relatively expensive before reaching this stage, and all that work was just wasted server resources – which creates an additional avenue for an attacker.

Fourth, Drupal has an exit function, drupal_exit(), that actually does useful cleanup and allows other modules to do the same. All of that gets bypassed when you just die() midstream.

Finally, Drupal has tools to do all this. There was no reason to do this so badly.

Better Solutions

In Drupal 8 this is part of core. Enable the RESTful Web Services module and optionally the contributed REST UI module, and in a few minutes you can have this more or less out of the box.

In Drupal 7 there are two modules that will do 90% of the work for us. If we really just want the raw node as JSON, we could use Content as JSON. But often we want more control over field selection, and the option to pull in related content (which the developer in this case did, and used as his argument for the approach taken). Views Datasource gives us the power of Views to select the data and provides a JSON display option (and a few others).

Views Datasource based approach:

- Install Views, ctools, and Views Datasource

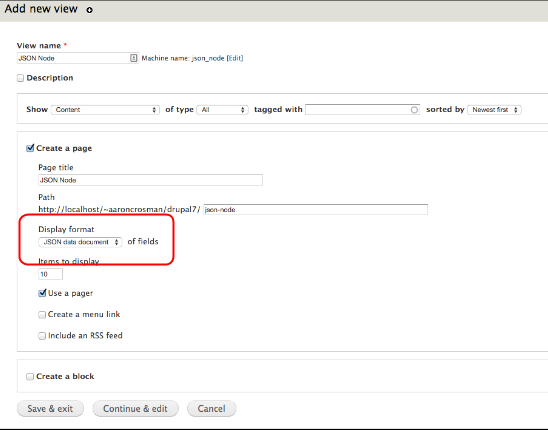

- Create your view and set the display format to JSON data document.

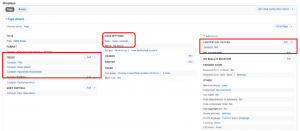

- Pick your fields, set the path, and define the contextual filter:

From there save your view and you’re done. That’s all there is to it. No custom code to maintain, you get to rely on popular community tools to handle access checking and other security concerns, and you get multiple layers of caching.

So what happened?

This sin never saw the light of day.

At the time I encountered this “solution” I worked for a company that was asked to review this site while it was still under development. Our code review gave the client a chance to go back to the developer and get a fix. The developer chose a more complicated solution than the Views one presented here (they defined a custom menu router item with hook_menu(), moved much of this code into the callback, and added a few security checks), which was good enough for the project. But I still would have done it in Views: it is much faster to develop, Views plays nicely with Drupal caching to help improve performance, and a straightforward approach is easy for a future developer to maintain.
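For readers who have not seen the hook_menu() route, here is a rough sketch of what such a callback can look like in Drupal 7. It is not the developer's actual fix: the module name mymodule, the path api/event/%node, and the choice of fields are all assumptions made for illustration, and it answers GET requests on a path rather than the POST with a mode that the original app sent. It only runs inside a Drupal 7 module.

```php
<?php
/**
 * Implements hook_menu().
 *
 * "mymodule" and the "api/event/%node" path are hypothetical names.
 */
function mymodule_menu() {
  $items = array();
  // The %node wildcard makes the menu system call node_load() on the
  // argument; a missing node results in a 404 before the callback runs.
  $items['api/event/%node'] = array(
    'page callback' => 'mymodule_event_json',
    'page arguments' => array(2),
    // Reuse core's node access check so unpublished or restricted nodes
    // are refused with a 403.
    'access callback' => 'node_access',
    'access arguments' => array('view', 2),
    'type' => MENU_CALLBACK,
  );
  return $items;
}

/**
 * Page callback: prints a small JSON document for one node.
 */
function mymodule_event_json($node) {
  $data = array(
    'nid' => (int) $node->nid,
    'title' => $node->title,
  );
  // field_get_items() resolves the field language instead of hard-coding 'und'.
  $body = field_get_items('node', $node, 'body');
  if ($body) {
    // Run the stored text through its text format before exposing it.
    $data['body'] = check_markup($body[0]['value'], $body[0]['format']);
  }
  // Sets the JSON content type and prints the encoded data. Returning nothing
  // lets Drupal finish the request normally, so no die() is needed.
  drupal_json_output($data);
}
```

Even this short version covers the main complaints above: the code lives in a module instead of template.php, it only runs for its own path, invalid IDs get a 404, and access is checked before anything is loaded into the response.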

Share your sins

I’m always looking for new material to include in this series. If you would like to submit a problem with a terrible solution, please remove any personally identifying information about the developer or where the code is running (the goal is not to embarrass individuals), post it as a gist (or with a similar public code sharing tool), and leave me a comment here about the problem with a link to the code. I’ll do my best to come up with a reasonable solution and share it with SC DUG and then here.

If there are security issues in the code you want to share, please report those to the site owner before you tell anyone else so they can fix it. And please make sure no one could get from the code back to the site in case they ignore your advice.