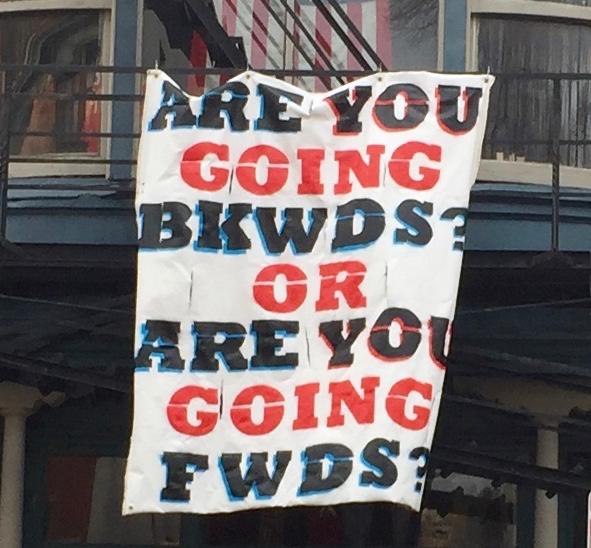

Words matter.

Grammar matters.

How we use language affects how our audience perceives us. In school teachers and professors taught many of us formal – and strict – rules for writing. Those rules are useful to know, but my point is not that you need to follow them strictly.

You need to control the language you use with intention, and create the impression you want your audience to have.

When we write at work, we are a reflection of ourselves, our team, and our companies. We want all those things to look good to our clients, customers, leadership – our audience. That is not the same goal as learning all the rules of Strunk and White. It’s about choosing the right words and structures to meeting our audience’s expectations.

To do this well, you need to understand the patterns and rules people think of as formal writing, and when to use idioms or patterns that break those rules. Sometimes it is better to follow the rules; sometimes it’s better to break them.

It’s a matter of fashion, pure and simple. People do need to be taught what the socially acceptable forms are. But what we should teach is not that the good way is logical and the way that you’re comfortable doing it is illogical. It should just be, here is the natural way, then there’s some things that you’re supposed to do in public because that’s the way it is, whether it’s fair or not.

John McWhorter

Strategic Use of Passive Voice

In college several professors demanded I never use the passive voice in my formal papers. Microsoft Word of the day backed up that assertion by flagging every passive sentence as a grammar error. In those papers they were right, since they controlled the grading standard, but those are not the rules I follow today.

Shortly after graduation my wife and I discovered the power of the passive voice when our rabbit chewed through a power cable of our brand new printer. When I called support I said: “The power cable is frayed.” They didn’t ask how the cable became frayed, they just assumed it had arrived that way and shipped us a new one. Perhaps not our most ethical moment, but a useful one in understanding the power of breaking the formal rules we’d been taught.

A simple definition of the passive voice is:

A passive construction occurs when you make the object of an action into the subject of a sentence. That is, whoever or whatever is performing the action is not the grammatical subject of the sentence.

UNC Writing Center

The classic joke is “Why was the road crossed by the chicken?” where we have clearly reversed the subject and the object of action. More commonly we leave the true actor, the person who should be the subject of the sentence, out of the sentence entirely. There are lots of discussions on the passive voice around, because it is an important concept to understand. But banning passive sentences is the wrong approach.

Passive construction makes sentences weak and frequently unclear. Without editing I will often write too many passive sentences in a post. But sometimes the weakness of passive construction helps to drive my point home.

In writing with clients over use of active voice can become accusatory. Think about the difference between “The data set provided has many errors.” vs “You provided me a data set with many errors.”

The first gives the reader room to blame a file, a process, or another cause other than themselves (which may be correct). The second points a finger. When I am trying to resolve a problem caused by bad sample data, pointing fingers, placing blame is not helpful. Sometimes we need to be clear, and identify actors explicitly. Sometimes we want to indirect to avoid creating unneeded tension.

Other Rules to Follow Strategically

In school our teachers taught many of us that paragraphs should always have three, or more, sentences that mirror the three sections of a paper: introduction, body, conclusion.

But a sentence by itself stands out and draws attention.

If every sentence were by itself they would stop standing out, so mixing emphasis and longer structures is still a good idea. The readability checker I use for my blog dislikes long sentences, preferring short choppy structures. Short punchy writing is easy to skim, but exhausting to read. Overly long sentences are hard to follow and may reveal incomplete thinking because they contain too many ideas. It’s a balance to be used carefully.

Punctuation is also taught as a series of strict rules. However use of commas, semicolons, periods, dashes, and so on are also matter of personal style. If you know the purpose of a mark you can decide when you want to use which to add emphasis and clarity to your text.

Starting sentences with conjunctions was most gracefully debated in Finding Forester (movie staring Sean Connery as an aging writer teaching a young African American boy how to become a writer):

Forrester: Paragraph three starts…with a conjunction, “and.” You should never start a sentence with a conjunction.

Jamal: Sure you can.

Forrester: No, it’s a firm rule.

Jamal: No, it was a firm rule. Sometimes using a conjunction at the start of a sentence makes it stand out. And that may be what the writer’s trying to do.

Forrester: And what is the risk?

Jamal: Well the risk is doing it too much. It’s a distraction. And it could give your piece a run-on feeling. But for the most part, the rule on using “and” or “but” at the start of a sentence is pretty shaky. Even though it’s still taught by too many professors. Some of the best writers have ignored that rule for years, including you.

More or less any rule or pattern you have been taught, you should explore when to follow and when to deviate.

Practice Until Your Comfortable

I know of no magic way to become comfortable using language carefully, except by doing it. Be thoughtful when you write. Edit carefully at every chance. Ask for feedback when available. And write in as many contexts as you can justify.

My whole point in keeping a blog, even well passed the era of independent tech blogging (and with limited care for the theme being used, SEO, or monetization) is to force myself to write regularly. I write different types of posts with different styles and attention to care. Some of that is months I’m being lazy, and some of that is very intentional.

I first wrote for this blog when I had a job that actively discouraged me from communicating well – I was told time and again that developers cannot write clearly. That’s crap: it was then, and it is now. So I started writing at least monthly to keep myself in practice.

Your practice does not need to be a blog. Find yourself some form of routine writing, and play with your style over time. Just a few suggestions of things to try:

- Letters to friends and loved ones (on actual paper and with a stamp)

- Short Stories or other fiction writing

- Contribute project documentation to an open source project

- Essays for Medium (more or less blogging with less commitment)

- Participate in an online writing challenge

- Journaling

- Take a writing course

Still More to Come

As I said in my first post on this topic, communications skills for developers and consultants is an enormous topic. I have not yet planned the series, but you should expect more to come.