Part of contributing to any open source project, or even really being a contributing member of any community, is sharing what you know. That can come in many forms. While many projects over emphasis code, and most of us understand the value of conference talks, good how-to articles are some of the most critical contributions for any software platform. There isn’t much point to a tool if people cannot figure out how to use it.

Why do I write how-to articles

I’ve contributed code to Drupal, some of it even good and useful to others. But usually when I hear someone noticed something I created it’s blog posts about how to solve a problem.

When I struggled to find the answer to a question I expect it is a candidate for a how-to post. I am not so creative that I am often solving a problem no one has, or will want to, solve for another project. And I am good enough at what I do to know that if I struggled to find an answer it was probably harder to find than it could been.

That helps me find topics for articles that are helpful to the community and benefit me.

How-to articles help others in the community use tools better

The goal of a good tutorial is to help accelerate another person’s learning process. The solution does not have to be perfect, and I know most people will have to adapt the answer to their project. I write them when I struggled to find a complete answer in one place, and so I’m hoping to provide one place that gives the reader enough to succeed.

Usually I combine practical experience earned after digging through several references at various levels of technical detail – including things like other people’s blog posts, API documentation, and even slogging through other people’s code. I then write one, hopefully coherent, reference to save others that digging extra reading.

The less time people spend researching how to do something, the more time they have to do interesting work. Better yet, it can mean more time using the tools for their actual purpose.

How-to articles serve as documentation for me, colleagues, and even clients

The best articles serve as high level documentation I can refer back to later to help me repeat a solution instead of recreating it from scratch. When I first wrote how-to articles I was solidifying my own learning, and leaving a trail for later.

They also came to serve as documentation for colleagues. When I don’t have time to sit with them to talk through a solution, or know the person prefers reading, I can provide the link to get them off and running. Colleagues have given me feedback about clarity, typos, and errors to help me improve the writing.

I have even sent posts to clients to help explain how some part of their solution was, or will be, implemented. That additional documentation of their project can help them extend and maintain their own projects.

How-To articles give me practice explaining things

One of the reasons I started blogging in the first place was to keep my writing skills sharpened. How-to articles in-particular tend to be good at helping me refine my process in specific areas. The mere act of writing them gives me practice at explaining technology and that practice pays off in trainings and future articles. If you compare my work on Drupal, Salesforce, and Electron you can see the clarity improve with experience.

How-To articles give me work samples to share

When I’ve been in job applicant mode those articles give me material to share with prospective employers. In addition to Github and Drupal.org, the how-to articles can help a hiring manager understand how I work. They show how explain things to others, how I engage in the community, and serve as samples of my writing.

How-To articles help me control my public reputation

I maintain a blog, in part, to help make sure that I have control over my public reputation. To do that I need inbound links the help maintain page rank and other similar basic SEO games.

From traffic statistics I know the most popular pages on this site are technical how-to articles. From personal anecdotes I know a few of my articles have become canonical descriptions of how to solve the problems.

When I first started my current job we had a client ask if I could implement a specific feature that he’d read about in a post on Planet Drupal. It turned out to be mine. Not only was I happy to agree to his request, it helped him trust our advice. My new colleagues better understood what this Drupal guy brought to the Salesforce team. Besides let’s be honest it’s fun when people cite your own work back at you.

Writing your own

You don’t have to maintain a whole blog to write useful how-to articles. Drupal, like most large open source projects, maintains public wiki-style documentation. Github pages allow anyone to freely publish simple articles and there are many examples of single-page articles out there. And of course there is no shortage of dedicated how-to sites that will also accept content.

The actual writing process isn’t that hard, but often people leave out steps, so I’ll share my process. This is similar to my general advice for writing instructions.

Pick your audience

It’ll be used more widely than whoever you think of, but have an audience in mind. Use that to help target a skill set. I often like to think of myself before I started whatever project inspired the article. The higher your skill set the more you should adjust down, but it’s hard to adjust too far, so be careful is aiming for people with far less experience than you have – make sure you have a reviewer with less experience check your work. Me − 1 is fine, Me − 5 is really hard to do well.

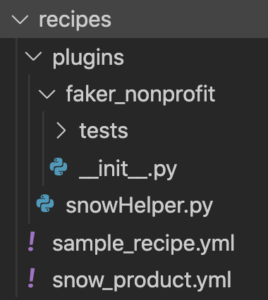

Start from the beginning and go carefully step by step

Start with no code, no setup, nothing. Then walk forward through the project one step at a time writing out each step. If you gloss over a detail because you assume your audience knows about it add reference links. You can have a copy of a reference project open but do not use it directly; it’s there to prevent you from having to re-research everything.

List your assumptions as you go

Anything that you need to have in place but don’t want to describe (like installing Drupal into a local environment, creating a basic module, installing Node, etc) state as an explicit assumption so your reader starts in the same place as you do. Provide links for any assumptions which are likely hard for your expected audience to complete. This is your first check point – if there are no good references to share, start from where that article you cannot find should start (or consider writing that article too).

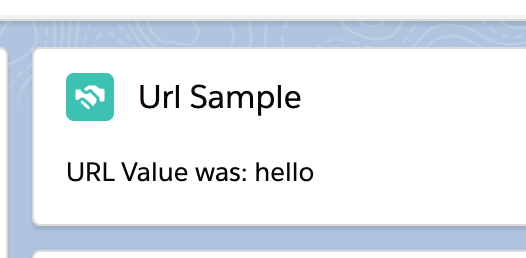

Provide detailed examples

Insert code samples, screenshots, or short videos as you progress. Depending on what you are doing in your article the exact details of what works best will vary. Copy and paste as little reference code as possible. This helps you avoid accidentally copying details that may be revealing of a specific project’s details.

If you look at mine you’ll see a lot of places where I include comments in sample code that say things like “Do useful stuff”. That is usually a hint that whoever inspired the article had interesting, and perhaps proprietary, ideas in that section of code (or at least I worried they would think it was interesting). I also try to add quick little asides in the code samples to help people pay attention.

Test as you go

Make sure your directions work without that reference project you’re not sharing. This is both so your directions work properly and further insulation against accidentally sharing information you ought not share.

End with a full example

If you end up with a bunch of code that you’ve introduced piecemeal, provide a complete project repo or gist at the end. You’ll see some of my articles end in all the code being displayed from a gist, and others link to a full repository. Far too many people simply copy and paste code from samples and then either use it blindly or get stuck. Moving it to the end helps get people to at least scan the actual directions along the way.

Give credit where credit is due

If you found partial answers in several places during your initial work, thank those people with links to their articles. Everyone who publishes online likes a little link-love and if the article was helpful to you it may be helpful to others. Give them a slight boost.